Structured 3D Latents for Scalable and Versatile 3D Generation

TL;DR: A native 3D generative model built on a unified Structured Latent representation and Rectified Flow Transformers, enabling versatile and high-quality 3D asset creation.

Abstract: We introduce a novel 3D generation method for versatile and high-quality 3D asset creation. The cornerstone is a unified Structured LATent (SLAT) representation which allows decoding to different output formats, such as Radiance Fields, 3D Gaussians, and meshes. This is achieved by integrating a sparsely populated 3D grid with dense multiview visual features extracted from a powerful vision foundation model, comprehensively capturing both structural (geometry) and textural (appearance) information while maintaining flexibility during decoding.
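One way to write SLAT formally is as a set of local latents anchored at the positions of active voxels. The symbols below ($\boldsymbol{z}_i$, $\boldsymbol{p}_i$, $N$, $L$, $C$) are illustrative notation chosen for this sketch, not definitions taken from this page:

$$
\boldsymbol{z} = \{(\boldsymbol{z}_i, \boldsymbol{p}_i)\}_{i=1}^{L}, \qquad \boldsymbol{z}_i \in \mathbb{R}^{C}, \qquad \boldsymbol{p}_i \in \{0, 1, \dots, N-1\}^{3}
$$

Here $N$ is the resolution of the 3D grid, $\boldsymbol{p}_i$ is the integer index of the $i$-th active voxel, $\boldsymbol{z}_i$ is the $C$-dimensional local latent attached to it, and $L$ is the number of active voxels. Because only voxels near the surface are kept, $L$ grows roughly with $N^2$ rather than $N^3$, which is what makes the grid sparse.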

We employ rectified flow transformers tailored for SLAT as our 3D generation models and train models with up to 2 billion parameters on a large 3D asset dataset of 500K diverse objects. Our model generates high-quality results with text or image conditions, significantly surpassing existing methods, including recent ones at similar scales. We showcase flexible output format selection and local 3D editing capabilities which were not offered by previous models. Code, model, and data will be released.
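The training objective behind rectified flow models can be sketched in a few lines. This is a minimal, stdlib-only illustration that assumes the common convention x_t = (1 - t) x0 + t eps, with data at t = 0 and Gaussian noise at t = 1, and a velocity model regressed onto eps - x0. The function names, the `velocity_model(x_t, t, cond)` signature, and the uniform sampling of t are assumptions made for this sketch; a real model would operate on SLAT tensors rather than flat lists and may use a different timestep distribution.

```python
import random


def rectified_flow_pair(x0, noise, t):
    """Point on the straight path from data x0 (t=0) to noise (t=1), plus its velocity.

    x_t = (1 - t) * x0 + t * noise, and d x_t / dt = noise - x0 (constant in t).
    """
    x_t = [(1.0 - t) * a + t * e for a, e in zip(x0, noise)]
    v_target = [e - a for a, e in zip(x0, noise)]
    return x_t, v_target


def flow_matching_loss(velocity_model, x0, cond, rng):
    """Single-sample flow matching loss (mean squared velocity error).

    `velocity_model(x_t, t, cond)` is a hypothetical stand-in for the transformer.
    """
    t = rng.random()                            # t ~ U[0, 1), a simplifying assumption
    noise = [rng.gauss(0.0, 1.0) for _ in x0]   # eps ~ N(0, I)
    x_t, v_target = rectified_flow_pair(x0, noise, t)
    v_pred = velocity_model(x_t, t, cond)
    return sum((p - q) ** 2 for p, q in zip(v_pred, v_target)) / len(x0)


if __name__ == "__main__":
    rng = random.Random(0)
    zero_model = lambda x_t, t, cond: [0.0] * len(x_t)  # untrained placeholder model
    print(flow_matching_loss(zero_model, [1.0, 2.0], None, rng))
```

Because the interpolation path is a straight line, the regression target does not depend on t, which is the property that lets rectified flow models sample with relatively few integration steps.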

NOTE: The appearance and geometry shown on this page are rendered from 3D Gaussians and meshes, respectively. GLB files are extracted by baking the appearance from the 3D Gaussians onto the meshes.

Generation | Text to 3D Asset

All text prompts are generated by GPT-4.

A Victorian mansion made of stone bricks with ornate trim, bay windows, and a wraparound porch.

Glowing orb on a stone pedestal.

Vintage copper rotary telephone with intricate detailing.

Spherical robot with gold and silver design.

A space colony with domed habitats and connecting tunnels.

Ship with copper and brown hues, intricate deck details.

Futuristic robotic arm on a table.

A stylized, cartoonish rocket with a red dome top and black antenna, teal cylindrical middle section with red bands and black connectors.

Generation | Image to 3D Asset

Image prompts are either generated by DALL-E 3 or extracted from SA-1B.

Editing | Asset Variants

DYM3NSION can generate variants of a given 3D asset that are coherent with the given text prompts.

Rugged, metallic texture with orange and white paint finish, suggesting a durable, industrial feel.

Knitted, fabric-like texture with green and purple colors, featuring playful details.

Rugged, metallic with leather straps and a blue accent, resembling a medieval weapon.

Transparent, glass-like structure, suggesting a high-tech design.

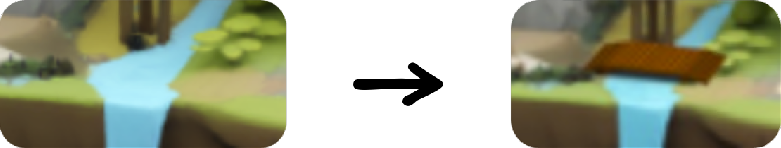

Editing | Local Manipulation

DYM3NSION can manipulate targeted local regions of a given 3D asset according to given text or image prompts.

Floating island with trees and a house.

Floating island with trees and open space.

Floating island with trees, river and waterfall.

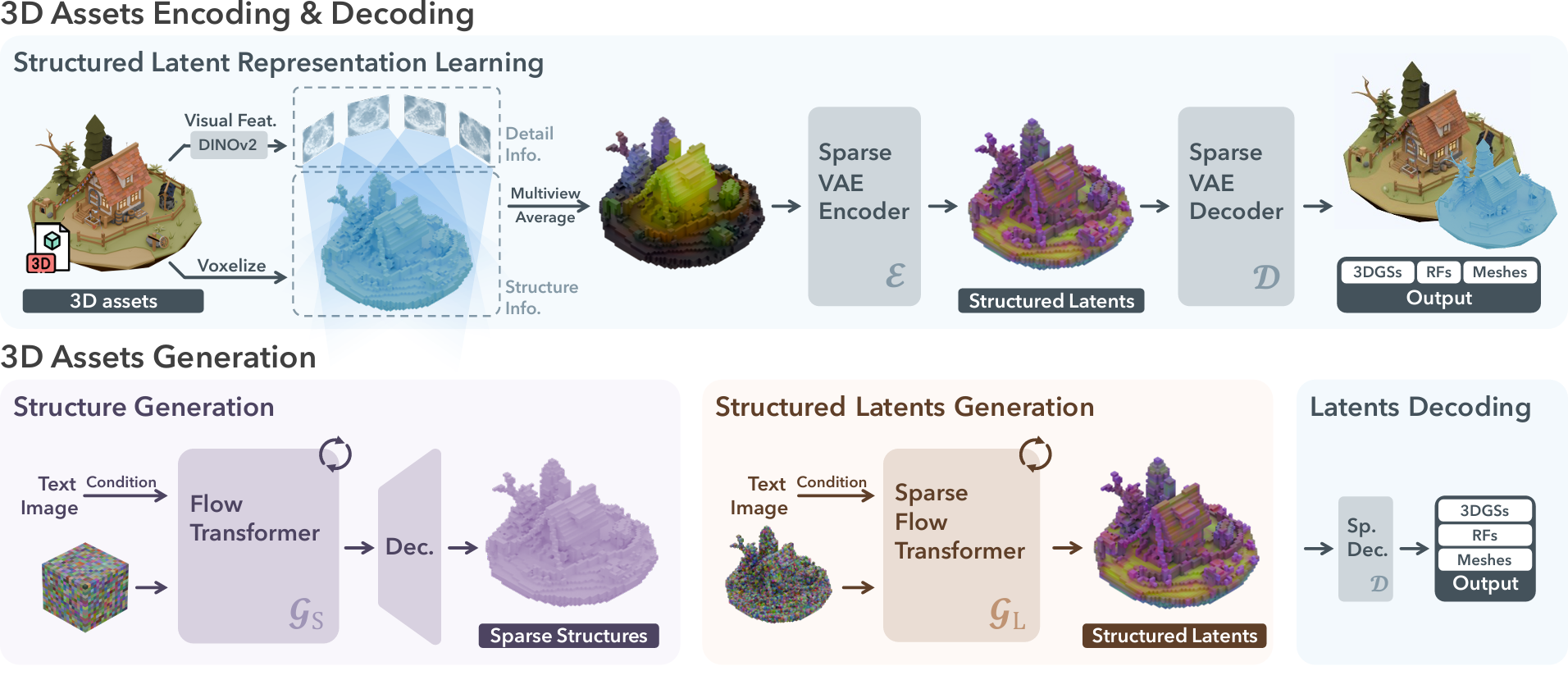

Methodology

We introduce Structured LATents (SLAT), a unified 3D latent representation for high-quality, versatile 3D generation. SLAT marries sparse structures with powerful visual representations. It defines local latents on active voxels, i.e., the voxels intersecting the object’s surface. The local latents are obtained by fusing and processing image features from densely rendered views of the 3D asset and attaching the results to the active voxels. These features, derived from powerful pretrained vision encoders, capture detailed geometric and visual characteristics, complementing the coarse structure provided by the active voxels. Different decoders can then be applied to map SLAT to diverse high-quality 3D representations.
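The encoding steps described above can be illustrated with a toy sketch. It assumes surface samples normalized to the unit cube [0, 1)^3, marks every voxel that contains a sample as active, and then stands in for the learned fusion step with a plain average of per-view features. The `views` callables (each mapping a voxel index to a feature vector, or `None` when the voxel is not visible) are a hypothetical interface that hides the projection into each rendered image and the feature lookup from the vision encoder.

```python
def active_voxels(surface_points, resolution):
    """Indices (i, j, k) of voxels in a resolution^3 grid that contain a surface sample.

    Points are assumed to be normalized to the unit cube [0, 1)^3; coordinates
    equal to 1.0 are clamped into the last cell.
    """
    active = set()
    for point in surface_points:
        idx = tuple(min(int(c * resolution), resolution - 1) for c in point)
        active.add(idx)
    return sorted(active)


def fuse_features(voxels, views):
    """Average the features each view reports for each active voxel.

    `views` is a hypothetical interface: each element is a callable that maps a
    voxel index to a feature vector, or returns None if the voxel is not visible
    in that rendered view. Plain averaging stands in for the learned fusion step.
    """
    fused = {}
    for voxel in voxels:
        observed = [f for f in (view(voxel) for view in views) if f is not None]
        if not observed:
            continue  # voxel never visible: leave it without a feature
        dim = len(observed[0])
        fused[voxel] = [sum(f[d] for f in observed) / len(observed) for d in range(dim)]
    return fused
```

For example, `active_voxels([(0.1, 0.1, 0.1), (0.12, 0.1, 0.1), (0.9, 0.5, 0.99)], 4)` merges the first two samples into one cell and yields `[(0, 0, 0), (3, 2, 3)]`. Per the description above, the fused features are then processed further to obtain the local latents; that learned step is omitted here.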

Building on SLAT, we train a family of large 3D generation models, dubbed DYM3NSION, conditioned on text prompts or images. A two-stage pipeline first generates the sparse structure of SLAT and then generates the latent vectors for the non-empty cells. We employ rectified flow transformers as our backbone models and adapt them to handle the sparsity in SLAT. We train DYM3NSION models with up to 2 billion parameters on a large dataset of carefully collected 3D assets. DYM3NSION can create high-quality 3D assets with detailed geometry and vivid texture, significantly surpassing previous methods. Moreover, it can easily generate 3D assets in different output formats to meet diverse downstream requirements.
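The two-stage pipeline can be sketched with a fixed-step Euler sampler for rectified flow. This is a simplified illustration under stated assumptions: stage 1 samples a dense occupancy field directly and keeps cells with positive values as active coordinates (the actual structure generator may operate in a compressed latent space instead), and stage 2 samples all per-voxel latents jointly, conditioned on those coordinates. `structure_velocity`, `latent_velocity`, and `generate` are hypothetical stand-ins for the two trained transformers and the surrounding sampling loop.

```python
import random


def euler_sample(velocity, size, steps, rng, cond=None):
    """Integrate a rectified flow from noise (t=1) to data (t=0) with fixed Euler steps.

    Assumes the convention x_t = (1 - t) * x0 + t * eps, so dx/dt = v(x, t) and
    each step moves from t to t - dt via x <- x - dt * v.
    """
    x = [rng.gauss(0.0, 1.0) for _ in range(size)]
    dt = 1.0 / steps
    for s in range(steps):
        t = 1.0 - s * dt
        v = velocity(x, t, cond)
        x = [xi - dt * vi for xi, vi in zip(x, v)]
    return x


def generate(structure_velocity, latent_velocity, resolution, latent_dim, steps, rng, cond=None):
    """Hypothetical two-stage sampler returning a list of (voxel_index, latent) pairs."""
    # Stage 1: sample a dense occupancy field and keep cells with positive values.
    occupancy = euler_sample(structure_velocity, resolution ** 3, steps, rng, cond)
    coords = [
        (i // resolution ** 2, (i // resolution) % resolution, i % resolution)
        for i, value in enumerate(occupancy)
        if value > 0.0
    ]
    # Stage 2: sample all local latents jointly, conditioned on the active coordinates.
    flat = euler_sample(
        lambda x, t, c: latent_velocity(x, t, c, coords),
        len(coords) * latent_dim, steps, rng, cond,
    )
    latents = [flat[k * latent_dim:(k + 1) * latent_dim] for k in range(len(coords))]
    return list(zip(coords, latents))
```

Because rectified flow paths are straight lines, an oracle velocity (x_t - x0) / t for a single target x0 makes the Euler integration land on x0 up to floating-point error, which is a convenient sanity check for a sampler like this one.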